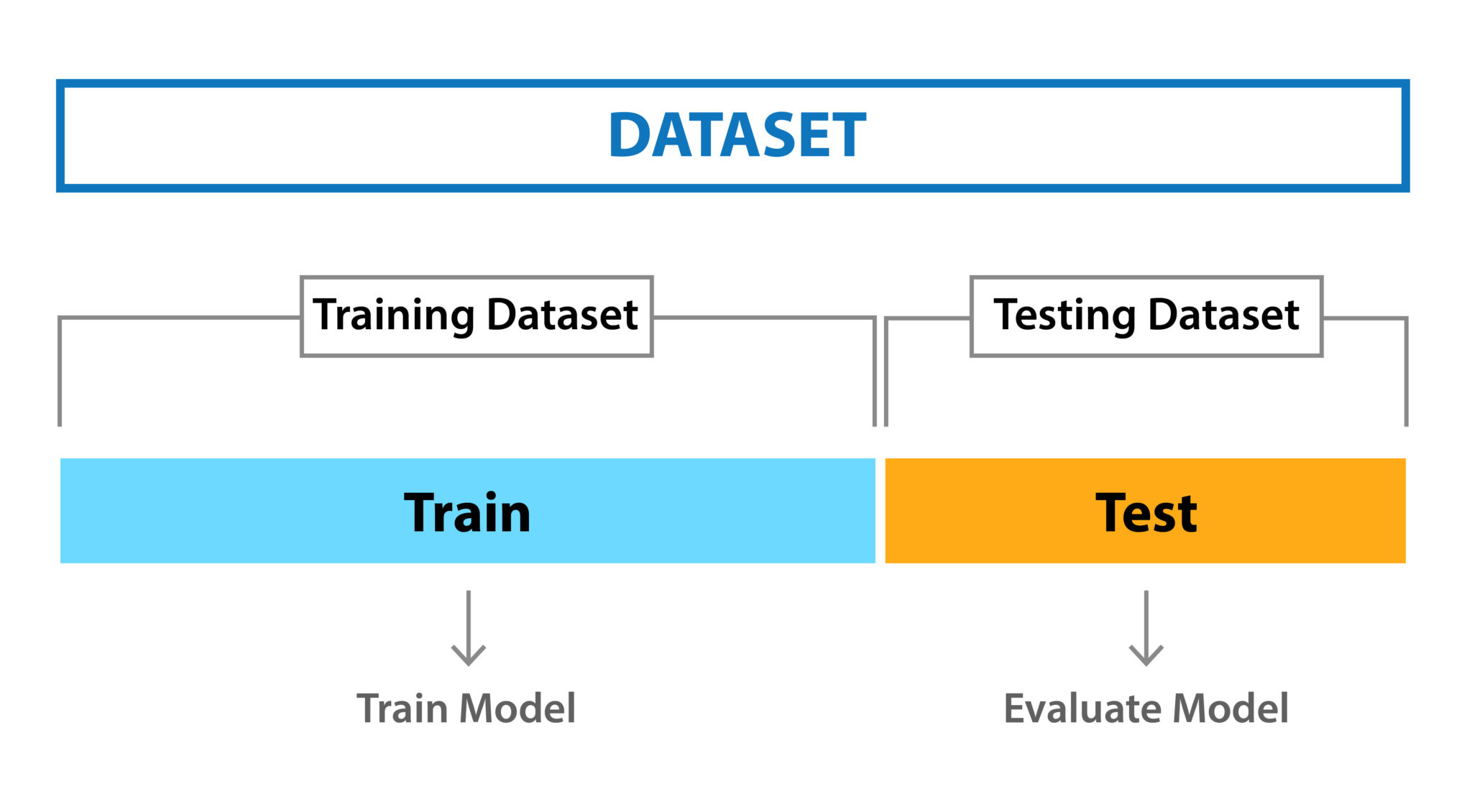

Cross-Validation is a resampling method used in machine learning to assess the accuracy of a model. It is an iterative process, where the data is split into subsets, which are then used as independent training and testing sets.

This allows us to measure the performance of our model with unseen data. The goal of Cross-Validation is to provide an accurate estimate of how well a given model will perform on unseen data.

Common Type of Cross-Validation

The most common type of Cross-Validation is k-fold Cross-Validation, which splits the original dataset into “k” equal parts, or folds, and then uses each fold as a validation set once, while using all other folds as training sets.

An average performance score across all k iterations is then used as the final result for the model’s accuracy. A benefit of using this approach over traditional train/test splitting techniques (such as holdout testing) is that it reduces variance in our performance estimates by ensuring that each observation has been used both as a training and validation point at least once in the evaluation process.

This leads to more reliable results, since it eliminates any bias from randomly selecting certain samples for use in only one part or subset of our data. In addition, when dealing with smaller datasets – such as those found in many areas of healthcare and medical research – k-fold Cross-Validation can help us maximize our resources by utilizing all available observations for both training and testing purposes.

Statistical Technique

This helps increase the accuracy of our models when there are limited amounts of data points available for analysis. Cross-validation (CV) is a statistical technique used to evaluate the performance of a model or algorithm on a given dataset.

It involves splitting the dataset into subsets, training the model on a portion of the data, and then testing it on the remaining data. CV is widely used in machine learning and data science algorithms. Advantages. One of the major advantages of CV is that it helps to avoid overfitting. Overfitting occurs when a model is trained on a particular dataset, and it performs very well on that dataset alone, but not on other datasets.

By using CV, the model is trained on various subsets of data, and tested on different subsets, which ensures that it can generalize well on new data. Another advantage of CV is that it helps in selecting the best model or algorithm for a particular problem. By comparing the performance of different models on different subsets of data, one can determine which model works best on a given dataset. CV also helps in tuning the hyperparameters of a model, which are important in determining the final performance of the model.

Advantages and Disadvantages

Disadvantages: One of the main disadvantages of CV is that it can be computationally expensive. This is due to the fact that it involves training and testing the model multiple times on different subsets of data.

This can be a problem for large datasets or complex models, where each iteration may take a long time to complete. Another disadvantage of CV is that it can lead to biased results if not done properly. This can happen if the dataset is not representative of the population, or if the subsets are not selected randomly. To avoid bias, it is important to ensure that the subsets are selected randomly, and that they are representative of the population.

Overall, Cross-Validation provides an effective way to assess and validate models on unseen datasets without compromising computational power or resource availability. By carefully selecting its size and proportions according to specific requirements, we can ensure that our models are robust enough to accurately predict future outcomes with greater consistency than traditional methods allow.

Conclusion

In conclusion, while CV has several advantages in model evaluation and selection, it also has a few drawbacks which must be carefully considered before use. However, with proper implementation, CV can be an effective tool in machine learning and data science.